Generic Trend Classification Engine using Pearson Correlation Coefficient

In my experience, trend analysis is generally done through manual (human) review and exploration of data in various BI tools. These tools do a great job of visually highlighting data that may interest the analyst, and when coupled with data-mining techniques such as clustering and forecasting, they give us invaluable, actionable information that helps us further explore and exploit the business or data model at hand. As far as I can tell, the name of the game these days is “exploratory data analysis and mining”, at least judging by the Business Intelligence products on the market and the direction they are taking.

As you can tell, there is still one weak point in that model of trend analysis: we have a human in the middle orchestrating it. This particular Homo sapiens has to look through the data and, aided by the visualization techniques offered by the BI product they are using, interpret from a bunch of squiggly lines on a graph, or from histograms and heat maps of the data, how the business should react and what actions should be taken to maximize or tweak a number (or a bunch of them) in the model. In its simplest form, that could mean increasing profitability for a business.

What I am proposing here is a naive (and raw) model for trend analysis that reduces the dependency on human on-the-spot interpretation of data and compartmentalizes the problem into discrete units that can be approached and reasoned about independently. Essentially, what I am proposing is a sort of Trend Classification Engine. At the least, it should get you thinking more about what a trend means to the business or data model rather than how to spot one. At best, this (or a similar strategy) could be integrated into your BI solution as a means of cataloguing and managing the process of trend analysis within a business.

Before we go any further, I would like to state that I am approaching the problem of trend analysis algorithmically (as an abstraction of the math) rather than mathematically (which is not my strongest suit). What I mean is that the *model* of analysis I present here might be right, but the underlying mathematical tools I use to reason about the data might not be the best or most appropriate for the job. There might be a better mathematical framework that can be used with the same algorithmic model to yield stronger results (suggestions would be awesome if you have any!).

Discretizing the Problem of Trend Analysis

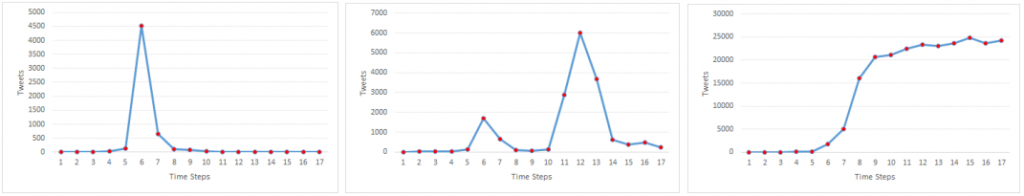

There is a very large (infinite?) number of patterns and trends that could emerge by simply mapping any two values against each other in a data-model. Let's take an example. I deal with online data research, so a relatable example for me is mapping the number of tweets on a particular topic against time, in an effort to gauge how topics trend on social networks. If you have spent as much time looking at those graphs as I have, you will soon start to notice recurring patterns in the shape of the graph. Here are 3 examples of trends I have noticed in topic interest on Twitter.

It is interesting to note the characteristics of each of these graphs:

- Graph 1: Plateau Trend: This trend has a sharp rise followed immediately by a steep decline. It is generally seen when a topic trends within a small community of nodes (Twitter users in our case), then fizzles out quickly and doesn’t propagate to other communities, either because there is no interest in the topic outside the community or because the community is isolated (no connectivity to other communities). A good example would be a work Halloween night out, which is generally only of interest to co-workers within the company (a small community); after the event happens, the topic tends to die out quickly.

- Graph 2: The Double Hop Trend: This is a very interesting and handy trend to spot early on, as it could allow you to capitalize on the second hop after identifying the first. There are many reasons why a topic might exhibit this type of trend. One example is a topic trending in a community that has low (but some) betweenness to another, bigger community: the first hop represents the topic trending in the first community, and the second hop is the same topic trending in the other (loosely connected) community. In our case, the number of time steps between the first and second hops reflects the connectivity between the two communities. Some niche topics tend to cross geographical regions using similar double hop patterns.

- Graph 3: The Jump Trend: An example of a typical jump trend is when a company reveals a new product (say “iPhone 5”), creating a steep increase that flattens out after the peak.

Being able to detect whether a topic is following a particular trend (trend classification) could be a powerful tool in the arsenal of content publishers. As with financial trading, some content trends will give you a much better return on investment in terms of traffic (and interest) than others.

So, in order to work around this large (infinite?) number of trends, we discretize the problem by hand-selecting a collection of trends (or trend templates). These trends are selected based on frequency of appearance in our data, importance to the business, or even by a trend detection algorithm. Each trend is then normalized (0 – 100), categorized and analysed, and a list of actions is generated to describe how to react to it. Basically, we are creating a filing system of known trends of interest; if one of them comes up again in our data-model, we can flag the actions that help us capitalize on it.

Trend Templating

This is actually a very straightforward process, and you could quickly write up an interface that allows end-users and power-users to manage and maintain their own trend templates. All that is needed is a way to draw trends (or provide data points) and a few fields to describe and annotate the trend. There are a few stipulations though:

- There need to be enough data points for the classification to be meaningful. The more data points a trend template has, the more specific the classification result will be.

- All values in the trend template data-set will be normalized between 0 – 100 to ease the comparison with live data.

- Each template will have an accuracy threshold value. This value represents how strongly the live data has to correlate with the template before it is considered a match. It is essentially a way of controlling the accuracy.

- Multiple variations of a particular trend could be mapped to the same classification to aid in proximity classification. For example, a smoother variation of the above Double Hop trend could be drawn and associated with the same end-result classification (image below).

It can be seen in the graph above that we have:

- X-axis: 17 data points in our trend template, each representing a Time Step (a day in our scenario). Let's call this the Trend Window.

- Y-axis: Non-normalized number of tweets with a maximum value of 1,700
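To make the stipulations above a little more concrete, here is a minimal sketch of what a trend template record could look like. The names (`TrendTemplate`, `normalize`, `threshold`, `actions`) and the 17 sample values are made up for illustration; only the ideas (0 to 100 normalization, an accuracy threshold, a Trend Window, attached actions) come from the text.

```python
from dataclasses import dataclass, field


def normalize(values, low=0.0, high=100.0):
    """Min-max scale values into [low, high]; a flat series maps to `low`."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [low for _ in values]
    return [low + (v - lo) / (hi - lo) * (high - low) for v in values]


@dataclass
class TrendTemplate:
    name: str
    points: list        # raw data points, one per time step
    threshold: float    # minimum correlation needed to count as a match
    actions: list = field(default_factory=list)  # how to react to this trend

    @property
    def window(self):
        """Trend Window: the number of data points in the template."""
        return len(self.points)

    @property
    def normalized(self):
        """Template values scaled to the 0 to 100 range."""
        return normalize(self.points)


# Hypothetical 17-point template with a maximum of 1,700 tweets (invented data).
double_hop = TrendTemplate(
    name="Double Hop",
    points=[0, 100, 400, 900, 700, 300, 200, 150, 200,
            500, 1100, 1700, 1300, 700, 300, 100, 0],
    threshold=0.9,
    actions=["Publish follow-up content before the second hop"],
)
```

Several variations of the same trend could simply be stored as separate `TrendTemplate` records that share a `name`.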

Trend Classification using Pearson Coefficient

As with any classification engine, we need our algorithm. The Pearson Correlation Coefficient is a measure of linear dependency between two data sets. I will not go into detail on how it behaves (that is what Wikipedia is for), but in essence it returns a single value between -1 and 1 representing the correlation between the two data-sets, where 1 is a perfect positive linear relationship, -1 a perfect negative one, and 0 no linear relationship at all. I will be using this measure of correlation to detect and classify trends from live data against our trend templates.
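For reference, the coefficient itself is only a few lines of code. The sketch below is a plain-Python version; the function name `pearson` is my own, and returning 0.0 for a completely flat series (where the coefficient is mathematically undefined) is an assumption made so that the function always returns a number.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    dx = [x - mean_x for x in xs]
    dy = [y - mean_y for y in ys]
    cov = sum(a * b for a, b in zip(dx, dy))
    var_x = sum(a * a for a in dx)
    var_y = sum(b * b for b in dy)
    if var_x == 0 or var_y == 0:
        # Assumption: a flat series is treated as "no correlation".
        return 0.0
    return cov / math.sqrt(var_x * var_y)
```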

The algorithm for this process is rather simple. We iterate through our live data-set, which may contain hundreds of data-points, looking at only a partial set at a time, whose size is defined by our Trend Window (the number of data points in our trend template). For each window, we normalize the live data and compute the Pearson Correlation Coefficient between our template data-set and the normalized live data. We keep doing this, moving one data-point (on the X-axis) at a time along our live data-set.
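The sliding-window pass described above could be sketched roughly as follows. All function names are my own, the data in the usage example is invented, and small `normalize` and `pearson` helpers are included only so the snippet runs on its own.

```python
import math


def normalize(values, low=0.0, high=100.0):
    """Min-max scale values into [low, high]; a flat series maps to `low`."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [low for _ in values]
    return [low + (v - lo) / (hi - lo) * (high - low) for v in values]


def pearson(xs, ys):
    """Pearson correlation; returns 0.0 for a flat series (an assumption)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy) if vx and vy else 0.0


def sliding_correlations(template, live):
    """Return one correlation score per window position in `live`."""
    window = len(template)  # the Trend Window
    tmpl = normalize(template)
    scores = []
    for start in range(len(live) - window + 1):
        segment = normalize(live[start:start + window])
        scores.append(pearson(tmpl, segment))
    return scores


# Invented data: the template shape appears (shifted and scaled) at index 2.
scores = sliding_correlations([0, 5, 10, 5, 0], [3, 3, 3, 13, 23, 13, 3, 3, 3])
```

One thing worth noting: the Pearson coefficient is unchanged by shifting or positive rescaling of either series, so the per-window normalization does not change the score. It is kept here to mirror the steps in the text.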

When a trend is present in the live data-set, we will start to see a cluster of high correlation values as the window moves across the trend's data-points. This cluster of values will look like a bell curve, with the highest point representing the best-matching window for our trend.

If the highest point is above our accuracy threshold, we report the trend as identified and return the window of data-points along with any annotations and actions.
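Picking the best window out of a list of per-window correlation scores might look like the sketch below. The function name `best_match`, the return shape, and the sample scores are illustrative assumptions; treating a score exactly equal to the threshold as a match is also my own choice.

```python
def best_match(scores, window, threshold):
    """Return (start, end, score) for the best window, or None if no match.

    `scores[i]` is the correlation for the window starting at index i,
    and `window` is the Trend Window size.
    """
    if not scores:
        return None
    start = max(range(len(scores)), key=scores.__getitem__)
    if scores[start] < threshold:
        return None
    return start, start + window, scores[start]


# Invented scores forming a rough bell shape around index 3.
match = best_match([0.1, 0.4, 0.8, 0.95, 0.7, 0.2], window=17, threshold=0.9)
```

This only reports the single highest peak. If the same trend can occur several times in a long live data-set, you would want to look for local peaks instead.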

How to Predict Trends using Trend Classification

Classifying trends in historical data (against the time dimension) still provides some interesting insight, but predicting a trend as it is happening tends to be a lot more useful, since data is much more actionable at the beginning of its half-life of usefulness than at the end.

A couple of ideas jump out here, none of which is really accurate (prediction never is), but they might help narrow the overall set of possibilities.

The first idea is to define a cut-off point in the Trend Window, either dynamic (per trend template) or static (say, consider only the first half of the data-points). The more data-points you consider, the more accurate your prediction will be, but the less time there will be to react to the trend (since you will be capturing it closer to the end of the window).
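A rough sketch of the cut-off idea: correlate only the leading part of a template with the most recent live data-points. The function name `early_match`, the `cutoff_fraction` parameter, and the sample data are all assumptions for illustration, and a small `pearson` helper is included so the snippet runs on its own.

```python
import math


def pearson(xs, ys):
    """Pearson correlation; returns 0.0 for a flat series (an assumption)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy) if vx and vy else 0.0


def early_match(template, live, cutoff_fraction=0.5):
    """Correlate the first part of `template` with the latest live points.

    Returns the correlation score, or None if there is not enough live data.
    Normalization is skipped because Pearson is unaffected by shifting or
    positive rescaling of either series.
    """
    k = max(2, int(len(template) * cutoff_fraction))
    if len(live) < k:
        return None
    return pearson(template[:k], live[-k:])


# Invented data: the last 4 live points follow the first half of the template.
score = early_match([0, 10, 30, 60, 100, 60, 30, 10], [5, 5, 6, 16, 36, 66])
```

A per-template (dynamic) cut-off would just mean storing `cutoff_fraction` alongside each template instead of passing one global value.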

The second idea is to enlist the help of a Time-Series prediction algorithm, preferably using a model that has been trained and customized to deal with the data at hand, to forecast future data-points which can then be compared to the trend templates using the Trend Classification technique above.

Both ideas have benefits and drawbacks; I’ll leave it to the reader to decide what works best.

Final Thoughts

To sum up, we have described how to build a Trend Classification Engine by combining a simple trend cataloguing solution with a repeated, sliding-window application of the Pearson Correlation Coefficient.

The techniques described here should also inherently provide a framework for data researchers and analysts to share and collaborate on their analysis of trends, rather than keeping that expertise locked up with a few select “experts”.

Oh, and I wrote this after a two-day hangover and an overdose of coffee, so be very careful how seriously you take all this information.
