Troubleshooting a Large SQL Server Transaction Log File

Managing space on your SQL Server Transaction Log can sometimes be daunting, usually if you have a good backup strategy, and a well moderated database (in terms of monitoring and flagging long running transactions), then you will rarely run into unexpected large growth of your transaction log file. But in the real world this is rarely the case, and there will be occasions when you leave a very long transaction running over night (or days), or there is a schedule clash between two queries, or between a query and the backup schedule, which caused a failure in your log truncation strategy.

The daunting part is making sure that you recover from the situation with minimum data loss and disruption to the database’s recovery strategy. In this post I will go through the most common scenarios of transaction log growth, and how to resolve them.

There are a number of factors that can delay the truncation of the log file, these include:

- A backup or a restore operation is running

- Long running transactions

- Database mirroring session is paused, causing a backlog of log activity that needs to be kept in order to synchronize with the mirror database, when the mirroring session is resumed.

You can check out the full list of factors that could delay the log truncation

Before anything else, it is highly recommended that you identify the reason causing the transaction log file to not reuse the existing space and grow, I have written an article on how to identify why your transaction log file is not being reused which goes through the DMV that displays this information.

There are a few alternative options that should be considered before taking any drastic measures, such as killing transactions or shrinking the transaction log file, these include:

- Increase the disk space on the log drive, a good option if you have your servers virtualized with a SAN drive layer.

- If you have multiple transaction log files on the same drive, then scaling out the I/O by splitting the files across different physical disks could help with space issues, as well as improve I/O concurrency access across databases.

- If your transaction log is full, but you still have disk space on the log drive, then you can increase the size of the log file, or set the log file to automatically grow.

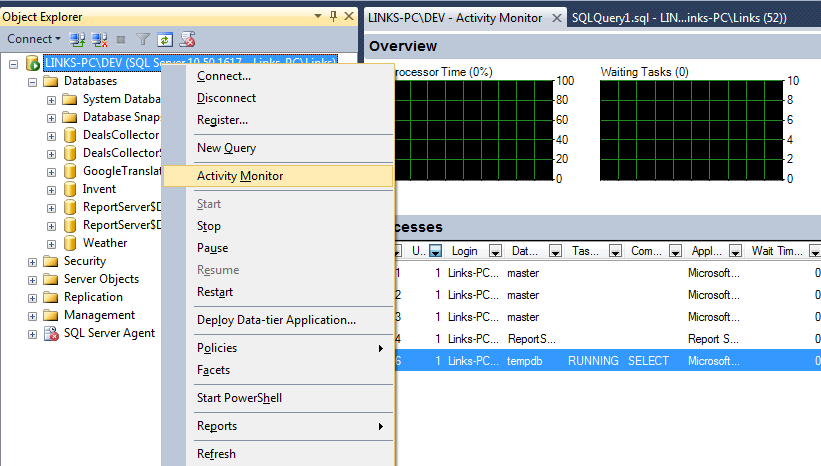

If none of the above is applicable, then the first thing you need to do is check to see what transactions are running. You can do that by right clicking on the SQL instance and selecting the Activity Monitor.

Activity Monitor will show you all active processes currently running on each database in the selected SQL instance, along with useful metrics such as wait type, wait resource and wait time. Using activity monitor you will be able to determine if there is any troublesome queries that should be killed. By killing the query you will be rolling back all changes since the last time the transaction committed to the database.

Look for a value of 1 in the “Head Blocker” column of the Processes list of the Activity Monitor interface; this could be in the database with the large transaction log, or transactions held in tempdb or master databases that are related to the database with a large log file.

The reason you need to kill long running transactions is to allow the database to reach a CHECKPOINT, this is because SQL Server cannot truncate a chain of log records without the transaction involved in this chain of records has finished, this is called the MinLSN value (or Minimum Log Sequence Number), this number is calculated by checking what transactions are still running since the last CHECKPONT. For more details on how this is calculated, you can visit the CHECKPOINTS & Active Log MSDN page .

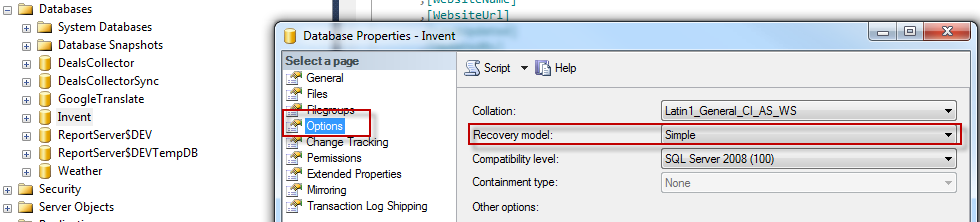

Then you’ll need to check the database’s recovery strategy, whether it has been set to Full Recovery mode or the Simple Recovery mode.

You can do that through SSMS, by right clicking on the database in the Object Explorer menu and selecting the “Properties” menu, then selecting the “Options” page.

Shrinking Transaction Log when Database is in Simple Recovery Mode

If your database is in Simple Recovery, then after you have killed all active transactions in the database, you’ll need to take a full database backup. After the backup operation is complete, and since all transactions are either rolled back or committed, SQL Server should technically truncate the log file. This is true if you don’t have any other factors affecting log truncation.

If the log file remained large, then you will need to issue a shrink file command on the log file, which looks like this:

DBCC SHRINKFILE(<log-file-name>, <file-size-in-MB>)

ALTER DATABASE <db-name> SET MULTI_USER WITH ROLLBACK IMMEDIATE

After running the code above, it is sometimes recommended to take another backup of the database.

Shrinking Transaction Log when Database is in Full Recovery Mode

If you’re database is in Full Recovery mode, then you need to be very careful not to lose point-in-time log recovery by shrinking the log file, without having proper backup of this data. After killing all long running transactions, the first thing you need to do is take a full backup of the database, then you will need to take a transaction log backup.

After performing the backup operations, and if the log file size still haven’t shrunk, then I really recommend contacting your DBA to check and see why the log file is not shrinking, this could relate to a paused mirroring session, or any of the reasons mentioned above at the start of the post.

If you are the DBA, or you are really certain you want to shrink the log file, you can issue the following command:

SQL Server 2008+

ALTER DATABASE <db-name> SET RECOVERY SIMPLE WITH NO_WAIT

DBCC SHRINKFILE(<log-file-name>, <file-size-in-MB>)

ALTER DATABASE <db-name> SET RECOVERY FULL WITH NO_WAIT

ALTER DATABASE <db-name> SET MULTI_USER WITH ROLLBACK IMMEDIATE

SQL Server 2005

BACKUP LOG <db-name> WITH TRUNCATE_ONLY

DBCC SHRINKFILE(<log-file-name>, <file-size-in-MB>)

ALTER DATABASE <db-name> SET MULTI_USER WITH ROLLBACK IMMEDIATE

As well as stopping point-in-time log recovery, the SHRINKFILE command also causes fragmentation of the file system, which could cause I/O implications if not handled as part of the server maintenance procedure.

It is crucial that you check with your DBA before you do any of the above.

I have also collated a list of resources that could help you deal with an oversized transaction log file in SQL Server:

- Microsoft Support’s article on how to recover from a full transaction log file

- SQLServerPedia’s article on truncating transaction log (for SQL 2005)

- MSDN’s article on shrinking the transaction log file (SQL 2008 R2)

Troubleshooting a Large SQL Server Transaction Log File rated 4 out of 5 by 1 readers

Leave a Reply

Want to join the discussion?Feel free to contribute!