Architectures for Running SQL Server Analysis Service (SSAS) on Data in Hadoop Hive

Recently I have been involved in researching and building a low-latency high-data-volume OLAP environment for a social entity and interaction analysis platform, the perfect mixture of concepts such as Big Data collection and processing, large-scale Network Analysis, Natural Language Processing (NLP) and a highly scaled-out OLAP environment for end users to explore and discover data (essentially a Self-Service and Exploratory BI layer).

It is by all means not an easy mission to orchestrate all the technologies that back those concepts, particularly if you are interested in using the optimum solution for the problem at hand, for example Big Data might be better handled by a Hadoop layer, but Hadoop or Hive (at least on their own) are not geared up to respond to OLAP queries, which are real-time by nature, and even if they were, your end-user needs familiar tools and interfaces to analyse and study this data, which is where SQL Server Analysis Service and the whole Microsoft BI stack might come in and offer great integration with already existing business applications (such as Office or SharePoint).

This post discusses a few architectural approaches to exposing a Hadoop layer through a SQL Server Analysis Service (SSAS) interface, with references to data-latency, redundancy and over-all performance.

The reasons for coupling Hadoop and Hive, a large scale distributed data processing and storing engines, with SSAS, a powerful data modelling and mining platform, is pretty clear, each of these technologies excel at a different layers of the over-all data delivery architecture.

When I refer to Hadoop in this article I am not going to discriminate regarding which Hadoop technology or implementation the proposed architectures apply to, but I have tested these configurations on 2 Hadoop implementations: Microsoft’s HDInsight cluster (local cluster and the Hadoop on Azure clusters, which are now in CTP), and a native installation of Hadoop and Hive using the latest stable version from the Apache website on a CentOS operating system, and the setup worked fine for both, albeit Microsoft’s HDInsight is much slower in terms of performance and not as extensible with many supporting projects as the Apache native Hadoop cluster.

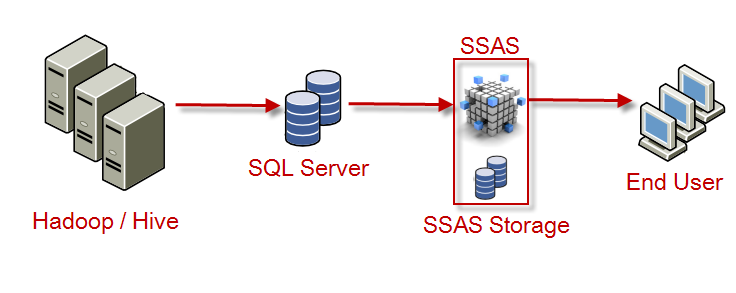

Standard Hadoop into SQL Server into SSAS Architecture

I call this design “standard” because you are essentially moving data from the Hadoop/Hive cluster environment and into SQL Server, before finally loading the data into the SSAS cube, as shown in the diagram below. There are abundance of drivers to connect to Hive, issue queries and push results into SQL Server, you could also potentially bypass Hive and write output files in Hadoop directly that are geared for SQL Server Bulk Import (BCP), which should improve the ETL into the SQL Server layer.

This architecture is particularly useful if the data in Hive is not suitable to be directly queried by Analysis Services without prior “relationalization” and cleansing through SQL operations, and implementing any changes to how the data is stored in Hive is not a viable option.

Architecture Diagram

3 different copies of the OLAP data will be available and maintained within the architecture, this poses difficulty in terms of data management and governance, which imposes large administration over-head of the data stack.

Data Footprint

High; Data will be duplicated in Hive, SQL Server and Analysis Service. Make sure you size up correctly the amount of space required to accommodate all this data in each layer of the system.

Latency

High; There is a large number of ETL processes that need to occur before data becomes live and available in SSAS, this includes acquiring and processing the data into Hive, moving the data into SQL Server, then finally reprocessing the SSAS cube and making it available for access. This architecture might be better suited in situations where data latency is not of paramount importance, and real-time (or near real-time) data availability is not a business requirement for the solution.

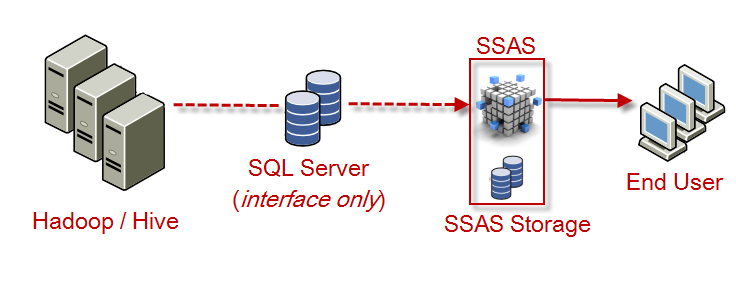

All Data in Hadoop, OLAP Data in SSAS

In this scenario data will be pushed directly from Hive to SSAS, with SQL Server only acting as a data interface layer, as shown in the diagram below.

SQL Server does not hold any data, and is only present in the architecture as a middle-man because of its ability to established a Linked Server connection to Hive using Microsoft’s HiveODBC driver, which allows SQL Server to present Hive data through its interface (by essentially creating VIEWs based on SQL Server OPENQUERY commands).

Of course the whole SQL Server layer could be bypassed completely, and ETL into the SASS cube could be done programmatically through Analysis Management Object (AMO), what makes this approach really cool is that you could essentially write directly to the cube using Hadoop’s Map/Reduce stage, before the data even reaching Hive, leading to further improvement in data latency.

This architecture have been tried and tested, Klout has released an excellent paper (SQL Server Analysis Services to Hive) detailing their implementation of this architecture. Totally a must read!

Architecture Diagram

Data Footprint

Medium; Although data is no longer stored on SQL Server, there are still two duplicating versions of the data that lives in Hive and SSAS.

Latency

Medium; In this scenario the SSAS cube will directly process data from Hive, the data will become available as soon as the cube completes processing. This brings the latency figures downs, but we are still dependent on cube processing rate, which on very large cubes can be a considerable figure.

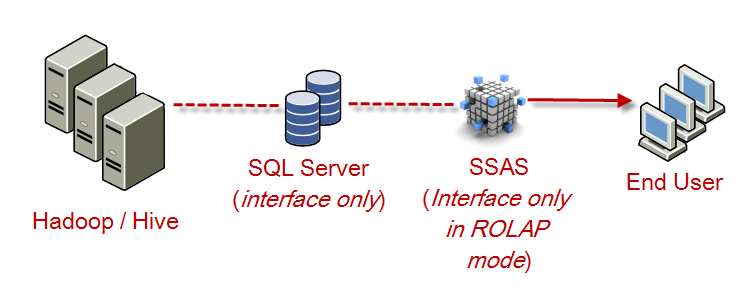

All Data in Hadoop, SSAS is only an Interface

This is pretty much the same architecture as before, except that the SSAS cube dimensions and measure groups are setup in ROLAP mode acting only as a data model, which essentially means all queries to the cube will be satisfied by the Hive storage engine. This does mean that Hive needs to be geared to satisfy OLAP and real-time queries, and there are a few projects and Hadoop optimisation techniques out there that tries to achieve this level of performance, turning Hive from a batch processing engine into a real-time analysis engine, these projects include:

- Impala from Cloudera: Impala is an improved Map/Reduce engine that operates ontop of Hive, it accepts HiveQL and implements a subset of the Hive vocabulary (which is constantly growing), Impala claims to offer 30X average improvement over standalone Hive queries. Cloudera offers a Virtual Machine that demonstrate CDH and Impala.

- Spark & Shark for Hadoop: These are two powerful technologies that work together to offer around 100X improvement over native Hive queries, Spark is a very cool Map/Reduce implementation with Java and Scala APIs, and Shark is essentially an in-memory data view storage engine.

- The Stinger initiative from Hortonworks: This is a Hadoop incubation project that aims to bring the Hive engine up to date with concepts such as adhoc queries, real-time analysis, data exploration and other emerging BI concept, I really recommend keeping a close eye on this project.

Architecture Diagram

Data Footprint

Low; Data is stored in one place within the architecture, and SSAS is only an interface for data modelling, pushing all queries back to the Hive engine.

Latency

Low; Since there is no ETL into SQL Server involved or SSAS cube processing (cube is in ROLAP mode), as soon as the data is made available for querying in Hive it becomes available for querying through SSAS.

Final Thoughts

Although this article mainly deals with SSAS, there are many other technologies out there that allows users to create and share Hadoop driven data models outside of the Microsoft technology stack, for example Tableau is a powerful visualization and data exploration tool that offers drivers to connect to various implementations of Hive, and Cloudera offers drivers to allow MicroStrategy BI tools to communicate with Hive.

Excellent article by Chris Webb on how to connect Impala to Excel PivotTables.

Good article, thanks!!!

Thomas

This post is amazing. I realy like it!

Excellent group of SSAS Hadoop articles!

I am a total Hadoop noob and I was struggling with getting tables I have created within Hive to show up on the linked server side. I assume this is something with security but I cannot figure out what settings are ncessary. Thoughts or links I could read up on would be appreciated.

Thanks AJ!

I would recommend doing a search for a how-to guide on how to set up a link between Hadoop and SQL Server. Otherwise, if you can let me know the specific problem you are having, I can try to provide some advice.

Thanks!