In-Memory (Memory Optimized) Tables in SQL Server 2014

In-Memory storage technology finally make their debut appearance on the SQL Server 2014’s BI stack, with the creation of a proper memory optimized tables and stored procedures, unlike the Columnstore feature which offers a read-only memory optimized solution, that does not work overly well in a true transactional environment.

In this post I hope to dissect the new In-Memory tables feature of SQL Server 2014, providing an overview of how the technology works, how to create in-memory tables, maintain them and any pitfalls to watch out from. Mainly though, I am writing this as a reminder to myself of the latest articles I have been reading about this cool new feature.

So lets begin with an obvious question

What are Memory-Optimized Tables?

Memory-Optimized tables are fully ACID compliant data storage structure, which stores it’s data mainly within the system’s memory, allowing users rapid access to data stored within the In-Memory structure in a transactional (OLTP) environment; which means users can read and write to this data structure without running into transactional consistency issues. This feature has been achieved by SQL Server 2014 through taking the following approach:

- In-Memory Row-Versioning: Memory-Optimized tables maintains various versions of the same row dispersed over the table’s in-memory data structure, this allows the reading and writing of rows concurrently without transactional inconsistency issues. That being said, and as with the SNAPSHOT ISOLATION LEVEL, row-versioning handles conflicts by throwing an error to the application, expecting the application to implement some sort of retry logic for failed transactions.

- Data durability through:

- Transaction Log: Logging to the database’s Transaction Log is a disk-based operation, and so this might become a bottleneck in a highly transactional In-Memory environment.

- Checkpoint and Delta files: placed on a special FileGroup for Memory-Optimized storage, these files are written to sequentially (and so optimised for spinning-disk), and are used mainly during SQL Server start-up to load in the Memory-Optimized tables in a database.

Creating Memory-Optimized Tables

So lets assume that you have a SQL Server 2014 test environment, with a database called sql2014, we will use this database to create a Memory-Optimized table by implementing the following steps:

Step 1: Create Memory Optimized FileGroup

USE [master] GO ALTER DATABASE [sql2014] ADD FILEGROUP [mod1] CONTAINS MEMORY_OPTIMIZED_DATA GO ALTER DATABASE [sql2014] ADD FILE ( NAME = N'sql2014_mod1', FILENAME = N'C:\sql2014\sql2014_mod1' ) TO FILEGROUP [mod1] GO

Remembering to replace the FILENAME parameter in the code above with your own local file path.

It is interesting to note that the Checkpoint and Delta files, used for maintaining Memory-Optimized table operation, are organized in a similar manner to a FILESTREAM FileGroup, and is in fact considered of type FILESTREAM when adding the new Memory-Optimized FileGroup to a database File.

Step 2: Create Memory Optimized Table

Creating an In-Memory (Memory-Optimized) tables in SQL Server is pretty straight forward.

USE sql2014 GO CREATE TABLE dbo.MemOptTable ( tableId int not null primary key nonclustered hash with (bucket_count=2000000), naturalId int not null index ix_naturalId nonclustered hash with (bucket_count=1000000), value nvarchar(100) null ) WITH (MEMORY_OPTIMIZED=ON, DURABILITY=SCHEMA_AND_DATA) GO

Durability options:

- SCHEMA_AND_DATA (default): This option ensures that data is recovered to the Memory-Optimized table when SQL Server is restarted, or is recovering from a crash.

- SCHEMA_ONLY: Like Tempdb data, the SCHEMA_ONLY bound Memory-Optimized table will be truncated if/when SQL Server is restarted or is recovering from a crash, but unlike the tables in Tempdb, the Memory-Optimized table will be re-created as a blank table at the end of the restart/recovery operation.

Indexing Memory-Optimized Tables

Memory Optimized tables support two types of indexes:

- Non-Clustered Hash Index: This is a memory optimized index, it does not support inequality operators as well as sort-order matching operations.

- Non-Clustered Index: This is a disk based index, fully supports all normal index operations.

There are a few stipulations for creating indexes on Memory-Optimized tables:

- Only 8 indexes are allowed on a Memory Optimized table

- Indexes cannot be added to a Memory Optimized table, instead the table has to be dropped and re-created with the new index.

- Primary Key is a requirement for Memory Optimized tables.

- All indexes are covering, which means they include all columns in a table.

- Indexes reference the (hashed) row directly, rather than referencing the Primary Key.

Querying Memory-Optimized Tables

Memory-Optimized tables supports access through T-SQL and Natively Compiled Stored Procedures. Generally, you will require a SNAPSHOT isolation level or higher in order to access a Memory-Optimized table. There is an excellent article on MSDN highlighting features that are supported by Memory-Optimized tables.

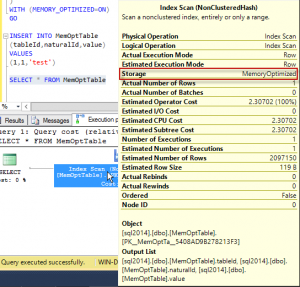

As with Columnstore, the Execution Plan for an In-Memory table shows the Storage attribute holding a MemoryOptimized value, as shown in the image below.

It is important to note that In-Memory tables leaves the burden of handling transactional conflicts to the application; this means that if a transaction fails, say due to attempting to update a record that has already been updated by another yet uncommitted transaction, then SQL Server’s engine will through a 41302 exception (error) to the application that started the transaction. Microsoft currently suggests implementing retry logic in applications accessing In-Memory tables, particularly ones that are running on a high OLTP databases, since conflicts are more likely to occur in a high throughput or high contention environments.

Maintaining Memory-Optimized Tables

- Writes can be as slow as your Transaction Log throughput: Which might be the bottleneck in the performance of your Memory-Optimized table, it is recommended to place the Transaction Log on fast access storage, such as Flash memory, to coupe with the throughput of the database containing the Memory-Optimized table.

- In-Memory update-able indexes influence some fundamental SQL optimization concepts with regard to data writes; since memory is optimized for RANDOM data access, a sequentially updating table index (such as ones that utilizes an IDENTITY(1,1) column) might not be the optimal option, considering that adding a new data point to a sequential index will always require a lock on the same parent node in the index’s B-Tree structure, instead of locking a random parent node within the B-Tree, which is what happens when the indexed columns is not a constantly increasing (or decreasing) identity column. This fundamentally shifts how optimization is conducted compared to disk-based tables.

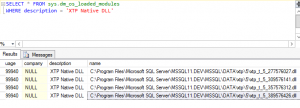

- The sys.dm_os_loaded_modules DMV can be used to identify the DLLs supporting the operations of In-Memory tables (and NATIVELY_COMPILED stored procedure) objects, these DLLs are loaded into memory while the In-Memory table is operating.

- Altering the definition requires droping and recreating the Memory-Optimized table, this involves stopping data operations, generating change scripts for the table and schema bound objects (for example Natively Compiled SPs), unloading of data, dropping and recreation of the table and schema bound objects, and finally reloading the data. A wee bit long-winded if you ask me, also makes it difficult to sneak an unnoticed update on the live environment without anyone suspecting anything! :).

Statistics Maintenance on Memory-Optimized Tables

Statistics behaves slightly differently on In-Memory tables compared to disk-based tables, there are a few things to remember here:

- Statistics are not updated automatically, it is recommended to setup a manual regular statistics update operation on your Memory-Optimized table.

- sp_updatestats always runs a statistics update, unlike disk-based table were sp_updatestats only updates statistics if there has been modifications since last run.

- Statistics update must always be specified as FULLSCAN update, rather than SAMPLED.

- Index key statistics are created when the table is empty, it is recommended to always update statistics after ETL.

- Natively Compiled stored procedures need to be recreated when statistics are updated, this is because execution plans for Natively Compiled stored procedures consider statistics only once, when the SPs are created.

Resources

- Getting Started with SQL Server 2014 In-Memory OLTP by SQL Server Blog team

- Intro into Memory-Optimized Tables on MSDN

statistics are important to be updated regularly, to ensure optimized query execution